The Critical Layer in LLM Apps

Large language models are extraordinary reasoning engines. They can parse language, follow instructions, and generate code or prose with fluency that feels almost human. But in practice, especially inside engineering teams and enterprises, raw intelligence isn’t enough.

A model that isn’t grounded in your systems, decisions, or workflows will often guess. Some guesses may seem plausible, others less so, but they’re rarely something you can count on.

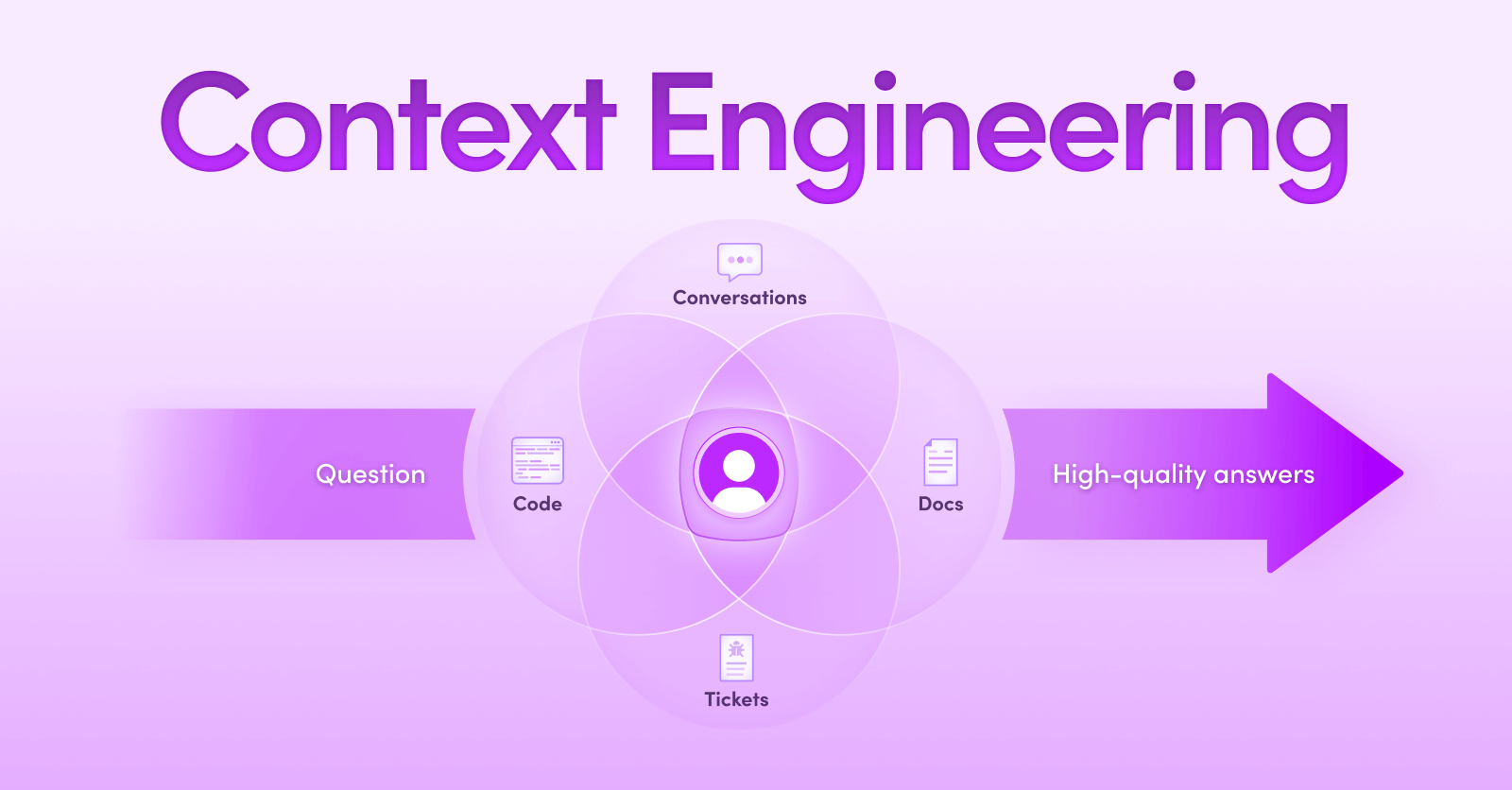

That’s where context engineering comes in. It’s not about tweaking prompts or adding clever instructions. It’s about making sure the right model sees the right information at the right time, so its reasoning is grounded in reality, not guesswork.

At Unblocked, we think of context engineering as the foundation of every serious AI application. It’s the deliberate practice of designing, structuring, and managing the information, memory, and environment an AI system relies on. This is how fragmented knowledge, code, docs, conversations, and metadata are transformed into coherent input the model can reason over.

Done right, it turns a general-purpose model into a system that understands your environment with precision and continuity.

Prompt Engineering vs. Context Engineering

Prompt engineering is about telling a model how to behave. You give it tone, structure, and rules of engagement:

- “Answer concisely.”
- “Use JSON format.”
- “Adopt a professional tone.”

It’s useful, but limited. Prompting doesn’t add knowledge; it only shapes how the model uses whatever it already knows.

Context engineering solves the other half of the problem: making sure the model has the right information in the first place. It’s not about style, it’s about substance. Context engineering grounds a model’s reasoning in your actual environment (your code, your decisions, your systems) so it responds with precision instead of guesswork.

| Dimension | Prompt Engineering | Context Engineering |
| --- | --- | --- |
| Goal | Influence behavior | Ground model in accurate context |
| Inputs | Static handcrafted text | Dynamically retrieved, filtered, and formatted content |
| State | Stateless | Stateful (short- and long-term memory) |
| Scalability | Brittle at scale | Modular, compressible, memory-aware |
| Access Control | N/A | Built-in permissions and filtering |

At Unblocked, prompt engineering is just one small layer of our solution. To achieve over 98% accuracy, we dynamically pull context from docs, issue trackers, and conversations so the model sees the most relevant, permission-safe knowledge for each query.

So What Is "Context" Exactly?

When we talk about “context” in AI systems, we don’t just mean the words in a single prompt. We mean the full substrate of information a model needs to reason well: the documents, conversations, code, metadata, and even the tacit knowledge that shape decisions.

For practical purposes, context includes:

- Immediate signals: the user’s query, recent conversation history, and current tasks.
- Persistent knowledge: docs, issue trackers, codebases, structured data.
- Memories: both short-term (what was said a few minutes ago) and long-term (preferences, recurring goals, tool usage).
- Organizational patterns: best practices, methods of working, conventions baked into processes.
- Human factors: team relationships, decision history, and the unwritten rules that shape collaboration.

Delivering that context isn’t a copy-paste job. It’s a real-time orchestration problem. Data must be retrieved, filtered, compressed, and ranked before being packed into the model’s context window. Some of it (like conversations) needs summarization to fit token limits. Other elements (like long-term preferences) need persistence so the model “remembers” what matters.
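To make the "ranked before being packed" step concrete, here is a minimal sketch of fitting scored snippets into a fixed token budget. It is an illustration under stated assumptions, not a description of Unblocked's implementation: the `Snippet` type, the `relevance` scores, and the word-count token estimate are all hypothetical stand-ins (a real system would use the model's own tokenizer).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Snippet:
    source: str       # illustrative label such as "slack", "jira", "docs"
    text: str
    relevance: float  # hypothetical score produced by an upstream ranker


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def pack_context(snippets: list[Snippet], budget: int) -> list[Snippet]:
    """Greedily keep the highest-relevance snippets that still fit the budget."""
    packed: list[Snippet] = []
    used = 0
    for snip in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = estimate_tokens(snip.text)
        if used + cost <= budget:
            packed.append(snip)
            used += cost
    return packed


candidates = [
    Snippet("jira", "Checkout fails intermittently under load", 0.6),
    Snippet("slack", "Root cause is a race in the cart cache; fix proposed in thread", 0.9),
    Snippet("docs", "General overview of the checkout service architecture and history", 0.3),
]
window = pack_context(candidates, budget=20)
print([s.source for s in window])
```

With a budget of 20 estimated tokens, the Slack and Jira snippets fit and the lower-scored overview is dropped. Greedy packing is the simplest possible policy; summarizing long items instead of discarding them is the natural next refinement.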

Why it matters

Imagine a subtle bug spanning multiple services:

- A Jira ticket exists but is missing key details.
- Engineers diagnosed the issue in Slack, trading insights and proposing fixes.
- The codebase hasn’t been updated yet.

If an AI assistant only sees the code, it might reinforce the bug or miss the nuance entirely. With context engineering, those Slack discussions and Jira signals are surfaced alongside the code, so the model understands not just what the system does, but what’s wrong with it and what’s already been considered.

That’s the essence of context: assembling the right mix of signals so the model can reason with depth, precision, and continuity.

Why Building a Context Engine Is Hard

Context matters, but the real challenge is ensuring every answer a model gives is based on the right evidence, for the right user, at the right time. That is not trivial. Here are a few of the challenges:

- Access control: Every piece of data must be checked at retrieval and assembly. If a user cannot see a Slack channel, Jira ticket, or GitHub repo in the source system, it cannot appear in the model’s context. Enforcing those checks dynamically, across systems, was something we built into Unblocked from day one.
- Relationships in data: Knowledge is not isolated. Code connects through call graphs. That same code is discussed in Slack, referenced in Jira, and explained in docs. Questions often span all of these at once. Capturing and retrieving those links is critical for reasoning.
- Conflicting sources: Artifacts disagree. A Slack thread may capture a new decision while an old runbook documents an outdated one. The engine must balance recency against authority.
- Token constraints: Models see only a limited context window. That forces scoring, compressing, and summarizing without losing the details that change decisions.
- Memory management: Short-term memory (conversation history) and long-term memory (preferences, recurring goals) both matter, but they must be filtered and re-summarized to avoid drift and overload.
- Latency vs. completeness: Context must be broad enough to surface subtle signals, but fast enough for real-time use. The balance depends on the interaction. For example, in async tasks like code reviews, precision is worth extra processing time.

Each of these challenges is tough on its own. Solving them together was the only way to deliver a context engine that actually works for engineering teams.
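The access-control requirement above is the easiest of these to show in code. The sketch below filters candidate artifacts against the requesting user before anything reaches the context window. Everything in it is assumed for illustration: the `Artifact` type, the `allowed_users` set standing in for permissions mirrored from the source system, and the user names. A production system would more likely resolve groups and channel membership than store flat user sets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    id: str
    system: str                # e.g. "slack", "jira", "github"
    allowed_users: frozenset   # hypothetical ACL mirrored from the source system


def visible_to(user: str, artifacts: list[Artifact]) -> list[Artifact]:
    """Drop every artifact the user could not open in its source system."""
    return [a for a in artifacts if user in a.allowed_users]


artifacts = [
    Artifact("thread-private", "slack", frozenset({"alex"})),
    Artifact("ticket-public", "jira", frozenset({"alex", "dana"})),
    Artifact("repo-core", "github", frozenset({"dana"})),
]

# Only artifacts "dana" can already see are eligible for the model's context.
eligible = visible_to("dana", artifacts)
print([a.id for a in eligible])
```

The point of the design is ordering: the filter runs before ranking and packing, so a restricted item can never influence the answer, even indirectly through a summary.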

How We Built the Unblocked Context Engine

Each of the challenges above shaped how our pipeline works. We broke the problem down into eight stages, each designed to make context precise, permission-safe, and efficient at inference time.

1. Data Preparation and Ingestion: We continuously sync data from GitHub, Jira, Slack, Confluence, and other systems. Every artifact is preprocessed with embeddings, metadata tagging, and access control propagation so retrieval is fast and permission-aware.
2. Learning the Environment: Before retrieval begins, the system builds an understanding of the organization: who people are, what they work on, the structure and history of projects, coding and review practices, and workflows. This “institutional map” helps interpret queries in context, so results reflect not just raw data but how the team actually operates.
3. Persistent Context (Short- and Long-Term Memory): The system captures and loads memory. Short-term memory includes recent conversations. Long-term memory preserves recurring preferences and assumptions. Both are filtered, scored, and compressed so the model remembers what matters without drifting or bloating.
4. Understanding the Working Context: We identify what the user is actively engaged with: repos, issues, reviews, teammates. This “work graph” sculpts the search space, reducing noise and surfacing what is relevant in the moment.
5. Interpreting Intent: Intent can sometimes be read directly from the question, but often it depends on context. For example, the query “Is this issue resolved?” might mean debugging if the user is looking at a failing test, status checking if they are reviewing a Jira ticket, or knowledge gathering if they are reading a Slack thread. By combining the query with the working context, the system classifies intent accurately and retrieves the information that matches what the user is trying to do.
6. First-Pass Retrieval: Using the scoped graph and intent, we pull candidate context across systems: Slack threads, PRs, Jira tickets, docs. Retrieval combines lexical and semantic search, link hydration, and metadata filtering, with access controls applied throughout.
7. Exploring Relationships: Context does not live in silos. A Jira issue links to a PR, which links to a Slack discussion, which links back to code. We traverse these relationships in real time to assemble a connected view, not just a list of fragments.
8. Rank, De-conflict, and Refine: Finally, we rank and refine. Conflicts are resolved by recency, authority, and graph proximity. Redundancies are removed. The top-ranked context is compressed and formatted into a coherent, model-ready window, with provenance attached for traceability (author, date, score, source, link, and more).

This pipeline is what lets Unblocked turn scattered, inconsistent knowledge into precise, permission-safe context. The best way to understand it is to see how it works on real engineering problems.
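As a rough illustration of the final stage, the sketch below scores candidates on the three signals named above (recency, authority, graph proximity) and attaches a provenance header to each. The weights, the 90-day half-life, the `Candidate` fields, and the example.com links are all assumptions chosen for the example; the post does not specify how Unblocked combines these signals, and redundancy removal is left out.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Candidate:
    source: str          # originating system, e.g. "slack" or "confluence"
    link: str            # placeholder URL kept for provenance
    author: str
    updated: date
    authority: float     # 0..1, assumed measure of how canonical the source is
    graph_distance: int  # hops from the user's working context
    text: str


def score(c: Candidate, today: date, half_life_days: float = 90.0) -> float:
    age_days = (today - c.updated).days
    recency = 0.5 ** (age_days / half_life_days)  # halves every half_life_days
    proximity = 1.0 / (1 + c.graph_distance)      # closer artifacts score higher
    # Hypothetical weights; a real engine would tune or learn these.
    return 0.4 * recency + 0.3 * c.authority + 0.3 * proximity


def rank(cands: list[Candidate], today: date) -> list[Candidate]:
    return sorted(cands, key=lambda c: score(c, today), reverse=True)


def with_provenance(c: Candidate, today: date) -> str:
    header = (f"[{c.source} | {c.author} | {c.updated.isoformat()} | "
              f"score={score(c, today):.2f} | {c.link}]")
    return header + "\n" + c.text


today = date(2024, 6, 1)
runbook = Candidate("confluence", "https://example.com/runbook", "alex",
                    date(2023, 1, 1), 0.9, 2, "Restart the worker to clear the queue.")
thread = Candidate("slack", "https://example.com/thread", "dana",
                   date(2024, 5, 20), 0.5, 1, "Decision: do not restart; drain the queue first.")

ranked = rank([runbook, thread], today)
print(with_provenance(ranked[0], today))
```

Here the recent Slack decision outranks the more authoritative but stale runbook, which is the conflict described earlier. Shifting weight toward `authority` would flip that outcome, so the balance is a product decision rather than a fixed rule.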

Beyond MCP: Agentic Coding with Unblocked’s Context Engine

Unblocked’s context engine is not limited to chat or search. It also powers agentic coding tools. Through our MCP server, assistants like Claude and Cursor get real-time access to a complete context picture: Jira tickets, Slack conversations, PR history, documentation, and more.

Unlike individual MCP tools, which only see their own narrow slice, Unblocked brings everything together. This means it can surface the “unknown unknowns” an assistant would not even know to look for, filling in missing links automatically and giving the model the complete view it needs to act effectively.

Example: “Help me solve this issue.”

A user pastes a Jira link. The ticket has some surface-level detail, but the real engineering discussion happened weeks ago in Slack. No direct link exists between the two.

A conventional system would stop at the Jira ticket. Cursor or Claude Code alone cannot know that Slack context exists. The assistant would not even know to look for it, because individual MCP tools only see their own slice of data.

Unblocked’s context engine closes that gap. It treats the Jira ticket as a signal, hydrates it, extracts metadata, and runs retrieval across the organizational graph. That uncovers adjacent activity: related PRs, Slack discussions, even comments from teammates who worked on the same systems.

We then rank results by proximity to the user’s work: repos they contributed to, systems they modified, terms that match their focus. The final context window spans both the formal artifact (the Jira ticket) and the informal, high-signal discussion (the Slack thread that explains the real root cause and proposed fix).

The difference is completeness. Unblocked does not just retrieve what is obvious. It surfaces the critical context an assistant would not know to ask for, so the model receives the full picture: what the bug is, how it was discovered, what has been tried, and where the risks lie.
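The "treat the ticket as a signal and run retrieval across the organizational graph" step in this example can be pictured as a bounded graph traversal. The sketch below is only that, a picture: the adjacency-list graph, the node names, and the idea that a ticket and a Slack thread connect through a shared `service:` node are all hypothetical, chosen to show how two artifacts with no direct link can still be found within a couple of hops.

```python
from __future__ import annotations

from collections import deque


def related_artifacts(graph: dict[str, list[str]], seed: str, max_hops: int) -> dict[str, int]:
    """Breadth-first search from the seed; returns {node: hop_count} within max_hops."""
    hops = {seed: 0}
    queue = deque([seed])
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    del hops[seed]  # the seed itself is not a "related" artifact
    return hops


# Hypothetical organizational graph: the ticket and the Slack thread are
# connected only indirectly, through metadata extracted from the ticket.
graph = {
    "jira:ticket": ["service:checkout"],
    "service:checkout": ["jira:ticket", "slack:thread", "pr:cache-fix"],
    "slack:thread": ["service:checkout"],
    "pr:cache-fix": ["service:checkout", "repo:cart"],
    "repo:cart": ["pr:cache-fix"],
}

found = related_artifacts(graph, "jira:ticket", max_hops=2)
print(found)
```

The hop count doubles as a proximity signal for ranking: nearer artifacts are more likely to matter, and the limit keeps the traversal cheap enough for interactive use.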

Example: “Optimize the Source Mark Calculator”

A developer asks Cursor:

“Optimize the Source Mark Calculator.”

Cursor analyzes the code, but it does not understand the history behind it. Prior work on performance has already been done. Possible optimizations were debated in Slack. A Jira ticket outlined which approaches were viable and which were ruled out. Without that context, Cursor thrashes, focusing on superficial tweaks or re-proposing ideas the team already explored and rejected.

Unblocked’s context engine changes this. When the request comes in, it does not stop at the code. It pulls in the complete context: prior PRs, the Slack thread where engineers debated tradeoffs, and the Jira ticket describing planned optimizations. That history is assembled into a coherent view and supplied to Cursor.

This is what siloed MCP tools cannot do. A Jira MCP can show the ticket, a Slack MCP can show the thread, and a GitHub MCP can show the code, but none of them can assemble the full picture or surface the “unknown unknowns” the agent would not think to ask for. Unblocked bridges those silos automatically, giving coding assistants the comprehensive, permission-safe context they need to act effectively.

Now, instead of chasing dead ends, Cursor can build directly on the team’s prior reasoning. It knows what has already been tried, what was discarded, and where the real opportunities lie.

Closing Thoughts

Context engineering is not a layer you add on top of LLMs; it is the core of making them useful.

It is what separates tools that appear intelligent from systems that actually deliver reliable, grounded answers. Bigger models and cleverer prompts alone will not close that gap. Only the right context, assembled at the right time, can.

At Unblocked, this is the work we do every day.

We take scattered organizational knowledge and transform it into coherent, permission-safe insight that models can use with precision and continuity.

This is not an add-on. It is the backbone of trustworthy AI for engineering teams, and it is how we believe the next generation of developer tools will be built.